- Discussion about looping in llama.cpp: https://github.com/ggerganov/llama.cpp/pull/5561

- According to @kalomaze, sampling from the original distribution (temperature 1.0 with no other samplers) avoids looping in almost all cases
  - The problem is usually introduced when you layer on things like lower temperature, Top P, etc.
  - However, some truncation (or a lower temperature by itself) is usually needed to keep the model coherent.
  - Min-P tries to let the model stay “sane” at high temperature, while avoiding sampling from the tail end of the distribution.
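To make the Min-P idea concrete, here is a minimal sketch. It assumes the usual formulation, in which a token survives only if its probability is at least `min_p` times the probability of the most likely token. The function names are hypothetical and are not taken from llama.cpp. The sketch also takes no stance on whether temperature is applied before or after the filter.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Softmax with temperature; the returned probabilities sum to 1.
// Assumes temperature > 0.
std::vector<float> softmax_with_temperature(const std::vector<float>& logits,
                                            float temperature) {
    std::vector<float> probs(logits.size());
    if (logits.empty()) return probs;
    // Subtract the max logit for numerical stability.
    const float max_logit = *std::max_element(logits.begin(), logits.end());
    float sum = 0.0f;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        probs[i] = std::exp((logits[i] - max_logit) / temperature);
        sum += probs[i];
    }
    for (float& p : probs) p /= sum;
    return probs;
}

// Min-P filter (hypothetical helper): keep only the tokens whose probability
// is at least min_p times the top probability. Returns surviving indices.
std::vector<std::size_t> min_p_filter(const std::vector<float>& probs,
                                      float min_p) {
    std::vector<std::size_t> kept;
    if (probs.empty()) return kept;
    const float threshold =
        min_p * *std::max_element(probs.begin(), probs.end());
    for (std::size_t i = 0; i < probs.size(); ++i) {
        if (probs[i] >= threshold) kept.push_back(i);
    }
    return kept;
}
```

Because the threshold scales with the top probability, the filter is strict when the model is confident and permissive when the distribution is flat, which is why it tolerates higher temperatures than a fixed Top P cutoff.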

Repetition penalties

- This technique applies a small negative bias to every token that has already appeared, to discourage repetition. For the multiplicative penalty, 1.0 leaves the scores unchanged, while 1.25 is considered somewhat extreme.

- Three types of penalties
  - `repeat_penalty` (multiplicative, independent of frequency)
  - `present_penalty` (additive, independent of frequency)
  - `freq_penalty` (additive, scales with frequency)
- How they work:

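  - Create a frequency map to count occurrences of each token
  - For each candidate token `i` that appears in the map with count `count`, adjust its logit:

```cpp
// Multiplicative penalty: negative logits are multiplied and positive logits
// are divided, so a repeated token always becomes less likely.
if (candidates->data[i].logit <= 0) {
    candidates->data[i].logit *= penalty_repeat;
} else {
    candidates->data[i].logit /= penalty_repeat;
}
// Additive penalties: the frequency term scales with count, the presence term
// is a flat offset for any token that has appeared at least once.
candidates->data[i].logit -= float(count) * penalty_freq + float(count > 0) * penalty_present;
```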

- These samplers are rather blunt instruments: they distort the grammar of standard language, which the model has been painstakingly trained to reproduce.

DRY

- https://github.com/oobabooga/text-generation-webui/pull/5677
- DRY penalizes tokens that would extend the end of the input into a sequence that has previously occurred in the input.

-

Example:

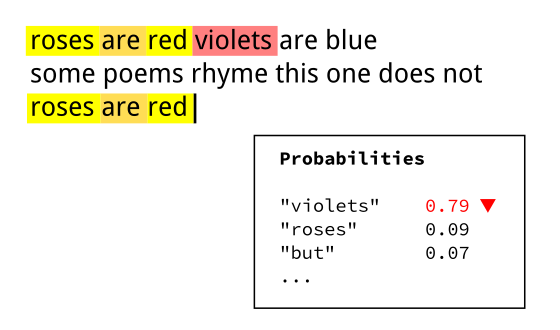

- In this example,

violetsis penalized in the probability distribution generated by the model because the sequenceroses are redhas previously occurred in the input, and has been continued withvioletsin that previous case.

- The penalty for a token is defined as `multiplier * base ^ (n - allowed_length)`
  - e.g. `multiplier=0.8`, `base=1.75` and `allowed_length=2`
  - where `n` is the length of the sequence before that token that matches the end of the input, and `multiplier`, `base`, and `allowed_length` are configurable parameters. If the length of the matching sequence is less than `allowed_length`, no penalty is applied.

- Thus the penalty grows exponentially as the repeated sequence gets longer.
  - This will quickly overcome even the strongest tendency of the model to repeat itself. With the right parameter choice, looping is literally impossible with DRY.

- The DRY penalty should be applied before any truncation samplers.
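The DRY rule described above can be sketched as follows. This is a simplified illustration, not the code from the linked PR: the function names are hypothetical, the match search is a naive quadratic scan, details of the real sampler such as sequence breakers are omitted, and the penalty is assumed to be subtracted from the logit.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <unordered_map>
#include <vector>

// Penalty for a token whose preceding context matches the end of the input
// for n tokens: multiplier * base^(n - allowed_length), or 0 if n is too short.
float dry_penalty(int n, float multiplier, float base, int allowed_length) {
    if (n < allowed_length) return 0.0f;
    return multiplier * std::pow(base, static_cast<float>(n - allowed_length));
}

// For every earlier position i, count how many tokens directly before i match
// the end of the input. tokens[i] is then the token that would extend the
// repetition; keep the longest match per token.
std::unordered_map<int, int> dry_match_lengths(const std::vector<int>& tokens) {
    std::unordered_map<int, int> best;
    const std::size_t N = tokens.size();
    for (std::size_t i = 1; i < N; ++i) {
        int n = 0;
        for (std::size_t k = 1; k <= i && tokens[i - k] == tokens[N - k]; ++k) {
            ++n;
        }
        if (n > 0) {
            int& cur = best[tokens[i]];
            cur = std::max(cur, n);
        }
    }
    return best;
}

// Subtract the DRY penalty from the logits of all penalized tokens
// (assumption: token ids index directly into logits).
void apply_dry(std::vector<float>& logits, const std::vector<int>& tokens,
               float multiplier, float base, int allowed_length) {
    const std::unordered_map<int, int> matches = dry_match_lengths(tokens);
    for (const auto& kv : matches) {
        if (kv.first < 0 || static_cast<std::size_t>(kv.first) >= logits.size()) continue;
        logits[static_cast<std::size_t>(kv.first)] -=
            dry_penalty(kv.second, multiplier, base, allowed_length);
    }
}
```

With `multiplier=0.8`, `base=1.75` and `allowed_length=2`, a match of length 2 costs 0.8, length 3 costs 1.4, and length 10 already costs roughly 70, which illustrates the exponential growth. Since the function only edits logits, it can run before any truncation sampler sees them.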

Entropy

- One can also potentially detect when a model is looping by tracking the entropy of the next-token distribution
  - If the entropy is too low (below a set threshold), one can add noise to the logits to push the model out of its “local optimum”
  - More details in Entropy-based sampling
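A minimal sketch of the entropy check might look like this. The notes do not specify the noise distribution or the threshold, so the Gaussian noise, the `threshold` and `noise_std` parameters, and both function names are assumptions made for illustration only.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

// Shannon entropy (in nats) of softmax(logits).
float softmax_entropy(const std::vector<float>& logits) {
    if (logits.empty()) return 0.0f;
    const float max_logit = *std::max_element(logits.begin(), logits.end());
    std::vector<double> e(logits.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        e[i] = std::exp(static_cast<double>(logits[i] - max_logit));
        sum += e[i];
    }
    double h = 0.0;
    for (double v : e) {
        const double p = v / sum;
        if (p > 0.0) h -= p * std::log(p);
    }
    return static_cast<float>(h);
}

// If the entropy is below the threshold, perturb the logits with zero-mean
// Gaussian noise (assumed noise model). Returns true if noise was added.
bool add_noise_if_low_entropy(std::vector<float>& logits, float threshold,
                              float noise_std, std::mt19937& rng) {
    if (softmax_entropy(logits) >= threshold) return false;
    std::normal_distribution<float> noise(0.0f, noise_std);
    for (float& l : logits) l += noise(rng);
    return true;
}
```

A uniform distribution over four tokens has entropy ln 4 (about 1.39 nats), while a distribution dominated by one token is close to 0, so a threshold somewhere in between separates the two cases in this toy setting. Real thresholds would need tuning per model.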