- `nanotron.distributed` file
  - wrapper around `torch.distributed`
  - they use the `@cache` decorator for `get_rank`-type calls
    - the `get_global_rank` cache has a speedup of 4 TFLOPS on a 7B model
  - `get_rank(group)` gives the "local" rank of a process within the given group
  - `get_global_rank(group, group_rank)` gives the global rank given the local rank within a given group
    - Is this correct? I thought rank was unstable, given nodes can fail? Depends on how failure is handled
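To make the local/global distinction and the caching concrete, here is a minimal pure-Python sketch. It does not call `torch.distributed`: a group is modelled as a tuple of global ranks, and `local_rank` / `global_rank` are hypothetical stand-ins for `get_rank(group)` and `get_global_rank(group, group_rank)`. The cache is only valid under the assumption that group membership never changes after creation, which is exactly what the question about node failures is probing.

```python
from functools import lru_cache

# Assumption: a process group is modelled as an immutable tuple of global ranks.
DP_GROUP = (2, 6)  # hypothetical group made of global ranks 2 and 6


@lru_cache(maxsize=None)
def global_rank(group: tuple, group_rank: int) -> int:
    """Stand-in for get_global_rank(group, group_rank)."""
    return group[group_rank]


@lru_cache(maxsize=None)
def local_rank(group: tuple, rank: int) -> int:
    """Stand-in for get_rank(group); the caller's global rank is passed explicitly."""
    return group.index(rank)


first = global_rank(DP_GROUP, 1)   # computed, then stored in the cache
second = global_rank(DP_GROUP, 1)  # served from the cache
```

If a failed node were replaced and ranks were reassigned, the cached values would be stale, so a cache like this would need to be cleared (`global_rank.cache_clear()`) whenever groups are rebuilt.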

- `nanotron.trainer` file
  - Defines `DistributedTrainer`
    - can log the throughput by setting the env variable `NANOTRON_BENCHMARK=1`
    - `_init_model()`
      - init RoPE
      - builds the model using `build_model()`
      - `make_ddp = DP > 1 and not (grad_accum_in_fp32 and zero_stage > 0)`
      - `model = DistributedDataParallel(model, process_group=parallel_context.dp_pg, broadcast_buffers=False, bucket_cap_mb=config.model.ddp_bucket_cap_mb)`
        - `bucket_cap_mb`: `DistributedDataParallel` will bucket parameters into multiple buckets so that gradient reduction of each bucket can potentially overlap with backward computation. `bucket_cap_mb` controls the bucket size in MegaBytes (MB). (default: 25)
        - `broadcast_buffers` (bool): Flag that enables syncing (broadcasting) buffers of the module at the beginning of the `forward` function. (default: `True`)
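The `make_ddp` condition is easier to read as a small truth table. The sketch below only restates the boolean from the notes; the function name `should_wrap_in_ddp` and its argument names are made up for illustration and are not nanotron's API.

```python
def should_wrap_in_ddp(dp_size: int, grad_accum_in_fp32: bool, zero_stage: int) -> bool:
    """Restates: make_ddp = DP > 1 and not (grad_accum_in_fp32 and zero_stage > 0)."""
    return dp_size > 1 and not (grad_accum_in_fp32 and zero_stage > 0)


# Enumerate a few configurations to see when the DDP wrapper is used.
cases = [
    (1, False, 0),  # no data parallelism: nothing to wrap
    (4, False, 0),  # plain DP
    (4, True, 0),   # fp32 accumulation without ZeRO
    (4, True, 1),   # fp32 accumulation combined with ZeRO
]
table = {case: should_wrap_in_ddp(*case) for case in cases}
```

The only DP > 1 case that skips the wrapper is fp32 gradient accumulation combined with a ZeRO stage above 0.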

- `nanotron.models` module
  - defines the `NanotronModel` class
    - contains its parallel context
    - `input_pp_rank` and `output_pp_rank`
  - `build_model()`
    - first get `model = model_builder()`, e.g. the Llama definition
    - gets all model chunks and defines the pipeline
    - computes the compute cost of each block to balance compute across PP blocks
    - assigns pipeline blocks to a given rank/process according to the computed assignment
      - sequential assignment ⇒ assumes GPipe or 1F1B, doesn't work with interleaved 1F1B
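The cost-balanced, sequential assignment described for `build_model()` could look roughly like the sketch below. It is an assumption about the general technique (cumulative cost against evenly spaced thresholds), not a copy of nanotron's code; `assign_blocks` and the example costs are hypothetical.

```python
from itertools import accumulate


def assign_blocks(costs, pp_size):
    """Assign contiguous blocks to PP ranks so each rank gets roughly total/pp_size cost."""
    cumulative = list(accumulate(costs))
    total = cumulative[-1]
    # Rank r keeps receiving blocks until the running cost reaches (r + 1) / pp_size of the total.
    thresholds = [total * (r + 1) / pp_size for r in range(pp_size)]
    assignment = []
    rank = 0
    for running_cost in cumulative:
        assignment.append(rank)
        if running_cost >= thresholds[rank] and rank < pp_size - 1:
            rank += 1
    return assignment


# Six equally expensive blocks split over three pipeline stages.
even_split = assign_blocks([2, 2, 2, 2, 2, 2], pp_size=3)
```

Because ranks are handed out in increasing order, each rank owns one contiguous slice of the model. That matches GPipe and plain 1F1B, while interleaved 1F1B needs each rank to own several non-contiguous chunks.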

-

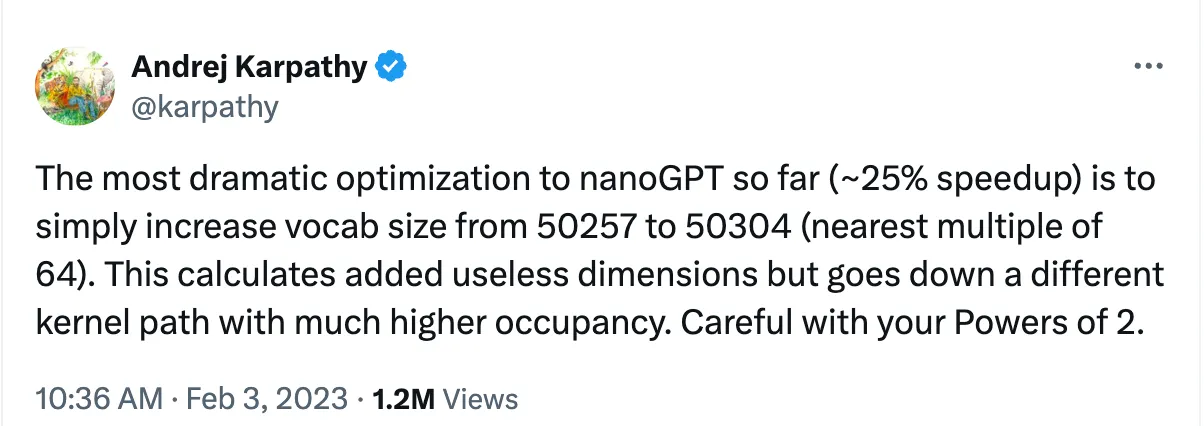

nanotron.helpersfile_vocab_size_with_padding(orig_vocab_size: int, tp_pg_size: int, make_vocab_size_divisible_by: int)- Pad vocab size so it is divisible by pg_size make_vocab_size_divisible_by

- pretty important!
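A minimal sketch of the padding rule, assuming the behaviour is "round up to the next multiple of `tp_pg_size * make_vocab_size_divisible_by`". The function below is written from that description and is not copied from nanotron.

```python
def vocab_size_with_padding(orig_vocab_size: int, tp_pg_size: int,
                            make_vocab_size_divisible_by: int) -> int:
    """Round the vocab size up to the next multiple of tp_pg_size * make_vocab_size_divisible_by."""
    multiple = tp_pg_size * make_vocab_size_divisible_by
    # Integer ceiling division avoids floating-point rounding on large vocabularies.
    return -(-orig_vocab_size // multiple) * multiple


padded = vocab_size_with_padding(50257, tp_pg_size=4, make_vocab_size_divisible_by=128)
```

With a GPT-2-sized vocabulary of 50257, TP=4 and a divisor of 128, the padded size is 50688, which splits into 4 equal shards of 12672 rows. Without padding, the embedding and LM head could not be sharded evenly along the vocab dimension.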

- `nanotron.parallel` module
  - `context` file
    - Defines `ParallelContext`
      - holds the 3D parallelism process group definitions
      - Only the `nccl` backend is supported for now. :(
        - For TPUs, you actually need to use XLA (Accelerated Linear Algebra)
          - `torch_xla.core.xla_model.mesh_reduce("loss", loss, np.mean)` instead of `torch.distributed.reduce(loss, op=torch.distributed.ReduceOp.SUM)`
        - The reason is that many backends (except `nccl`) don't support `reduce_scatter`. To emulate the behaviour, you need to use `AlltoAll` with a `sum()`, which is expensive. (https://github.com/pytorch/pytorch/blob/2b267fa7f28e18ca6ea1de4201d2541a40411457/torch/distributed/nn/functional.py#L317)
        - AMD has their equivalent of `nccl`, called `rccl`, which does support `reduce_scatter`!
      - has a cryptic piece of code to create the 3D parallelism process groups: `_init_parallel_groups()`
        - rewrote it to be clearer :)))
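One way to picture what the 3D process-group setup has to produce is to lay the global ranks out on a `(pp, dp, tp)` grid and read off one group per axis. The sketch below is pure Python and creates no real process groups. The axis order (TP fastest-varying, then DP, then PP) is an assumption for illustration and may differ from what `_init_parallel_groups()` actually does.

```python
def build_3d_groups(tp: int, dp: int, pp: int):
    """Return the lists of global ranks forming each TP, DP and PP group."""

    def rank(p: int, d: int, t: int) -> int:
        # Assumed layout: TP is the fastest-varying axis, PP the slowest.
        return (p * dp + d) * tp + t

    tp_groups = [[rank(p, d, t) for t in range(tp)] for p in range(pp) for d in range(dp)]
    dp_groups = [[rank(p, d, t) for d in range(dp)] for p in range(pp) for t in range(tp)]
    pp_groups = [[rank(p, d, t) for p in range(pp)] for d in range(dp) for t in range(tp)]
    return {"tp": tp_groups, "dp": dp_groups, "pp": pp_groups}


groups = build_3d_groups(tp=2, dp=2, pp=2)
```

With 8 ranks this gives TP groups of neighbouring ranks (`[0, 1]`, `[2, 3]`, ...), DP groups with stride 2 (`[0, 2]`, `[1, 3]`, ...) and PP groups with stride 4 (`[0, 4]`, `[1, 5]`, ...). Every rank appears in exactly one group per axis.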

  - `data_parallel.utils` module
    - e.g. `sync_gradients_across_dp`

  - `pipeline_parallel` module
    - `engine` file
      - contains the `PipelineEngine`
        - we have `AllForwardAllBackwardPipelineEngine` (a.k.a. GPipe)
        - we have `OneForwardOneBackwardPipelineEngine` (a.k.a. 1F1B or PipeDream)
      - the `TensorPointer` dataclass
        - Dataclass specifying which rank we need to query a tensor from in order to access its data
    - `utils` file
      - defines `get_input_output_pp_ranks(model)`
        - to know which ranks to feed the dataloader to
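The difference between the two engines is mostly the order in which a stage runs forward and backward passes over micro-batches. The sketch below generates that order for a single stage. It follows the usual textbook description of the two schedules and is not nanotron's engine code. All function names are hypothetical, and the backward order in the all-forward-all-backward case is a simplification.

```python
def afab_schedule(n_micro: int):
    """All-forward-all-backward (GPipe-style): every forward, then every backward."""
    return [("F", i) for i in range(n_micro)] + [("B", i) for i in range(n_micro)]


def one_f_one_b_schedule(n_micro: int, pp_size: int, pp_rank: int):
    """1F1B: a few warm-up forwards, then alternate forward/backward, then drain."""
    warmup = min(pp_size - pp_rank - 1, n_micro)
    steps = [("F", i) for i in range(warmup)]
    for i in range(n_micro - warmup):
        steps.append(("F", warmup + i))  # one forward ...
        steps.append(("B", i))           # ... followed by one backward
    steps += [("B", i) for i in range(n_micro - warmup, n_micro)]
    return steps


def peak_in_flight(steps) -> int:
    """Largest number of micro-batches whose activations are alive at the same time."""
    live = peak = 0
    for kind, _ in steps:
        live += 1 if kind == "F" else -1
        peak = max(peak, live)
    return peak


first_stage = one_f_one_b_schedule(n_micro=4, pp_size=2, pp_rank=0)
```

Under this model, the all-forward-all-backward schedule keeps all 4 micro-batches alive at its peak. The first 1F1B stage of a 2-stage pipeline holds at most 2, which is the main memory argument for 1F1B.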

  - `tensor_parallel` module
    - not clear whether sequence parallelism is actually supported
      - sequence parallel == `TensorParallelLinearMode.REDUCE_SCATTER`?
        - (first sync) is an all-gather along the sequence dimension in the forward pass, and a reduce-scatter in the backward pass
        - (second sync) is a reduce-scatter in the forward pass, and an all-gather in the backward pass
      - Classic TP is `TensorParallelLinearMode.ALL_REDUCE`
        - (first sync) is an identity (or splitting) in the forward pass, and an all-reduce in the backward pass
        - (second sync) is an all-reduce in the forward pass, where the partial matrices are aggregated by summing, and an identity (or splitting) in the backward pass
    - `functional.py` file
      - defines `column_linear`, `row_linear` and their async counterparts
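The classic `ALL_REDUCE` pattern (column-parallel linear, then row-parallel linear, then a sum) can be checked numerically without any GPUs. The sketch below uses plain Python lists and simulates the TP ranks in a loop. The helper names are hypothetical, and the final `add` stands in for the forward all-reduce of the second sync.

```python
def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def split_cols(m, n):
    width = len(m[0]) // n
    return [[row[i * width:(i + 1) * width] for row in m] for i in range(n)]


def split_rows(m, n):
    height = len(m) // n
    return [m[i * height:(i + 1) * height] for i in range(n)]


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def tp_two_linears(x, w1, w2, tp):
    """Column-parallel w1, row-parallel w2, then sum the per-rank partial outputs."""
    w1_shards = split_cols(w1, tp)  # each rank holds a slice of w1's output columns
    w2_shards = split_rows(w2, tp)  # each rank holds the matching slice of w2's input rows
    partials = [matmul(matmul(x, a), b) for a, b in zip(w1_shards, w2_shards)]
    out = partials[0]
    for partial in partials[1:]:
        out = add(out, partial)  # stands in for the all-reduce (sum) across TP ranks
    return out


x = [[1, 2]]
w1 = [[1, 2, 3, 4], [5, 6, 7, 8]]
w2 = [[1, 0], [0, 1], [1, 1], [2, 0]]
sharded = tp_two_linears(x, w1, w2, tp=2)
reference = matmul(matmul(x, w1), w2)
```

The input `x` is used unchanged by every rank (the identity of the first sync), and only one communication step is needed in the forward pass. In `REDUCE_SCATTER` mode that final sum would instead be a reduce-scatter, leaving each rank with a slice of the output.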

  - The parameters files
    - `parameters` file
      - Defines the base class for all parameters in Nanotron models: `NanotronParameter` (inherits from `torch.nn.Parameter`)
        - each parameter has metadata (a dict)
          - attribute_name
          - tied_parameter info
          - sharded_parameter info
    - `sharded_parameters` file
      - methods for sharding
        - given a `torch.nn.Parameter`, a process group, and a split config
        - returns a sharded `NanotronParameter`
    - `tied_parameters` file

- `nanotron.utils` module
  - Includes the `main_rank_first` context manager

- `nanotron.config` module
  - Includes all definitions of args
    - for data, parallelism, model, etc.
    - the method `get_config_from_file`

- `nanotron.dataloader` file
  - Includes
    - `clm_process` (causal language modeling preprocessing)
    - `get_datasets` (get datasets from HF)
    - `get_train_dataloader()`