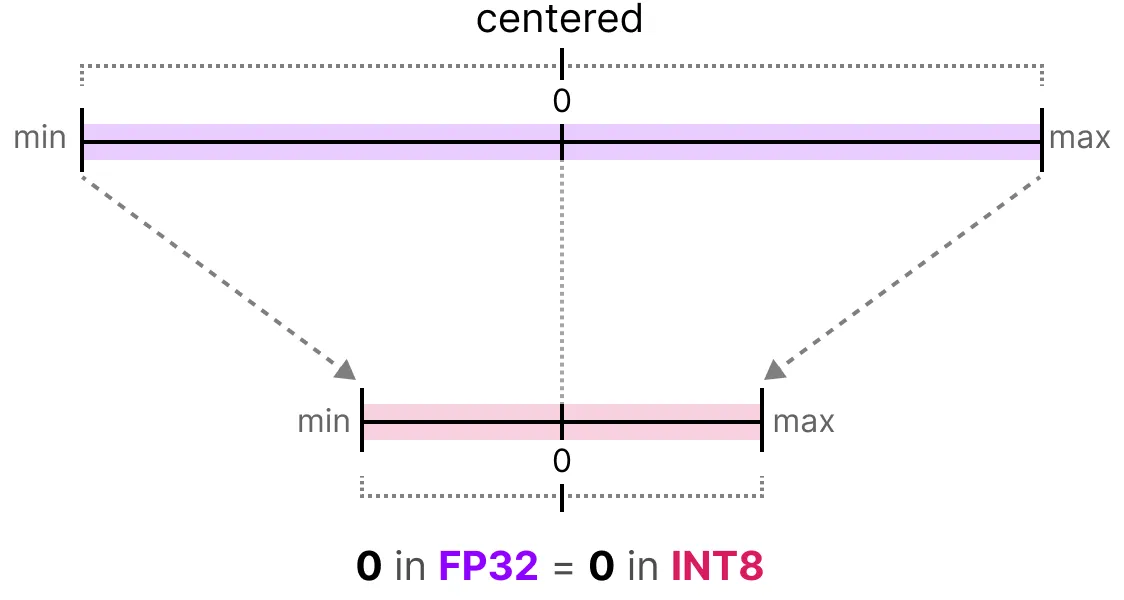

Symmetric quantization

- The quantized value for zero is exactly the full-precision zero, so the quantized range is symmetric around zero

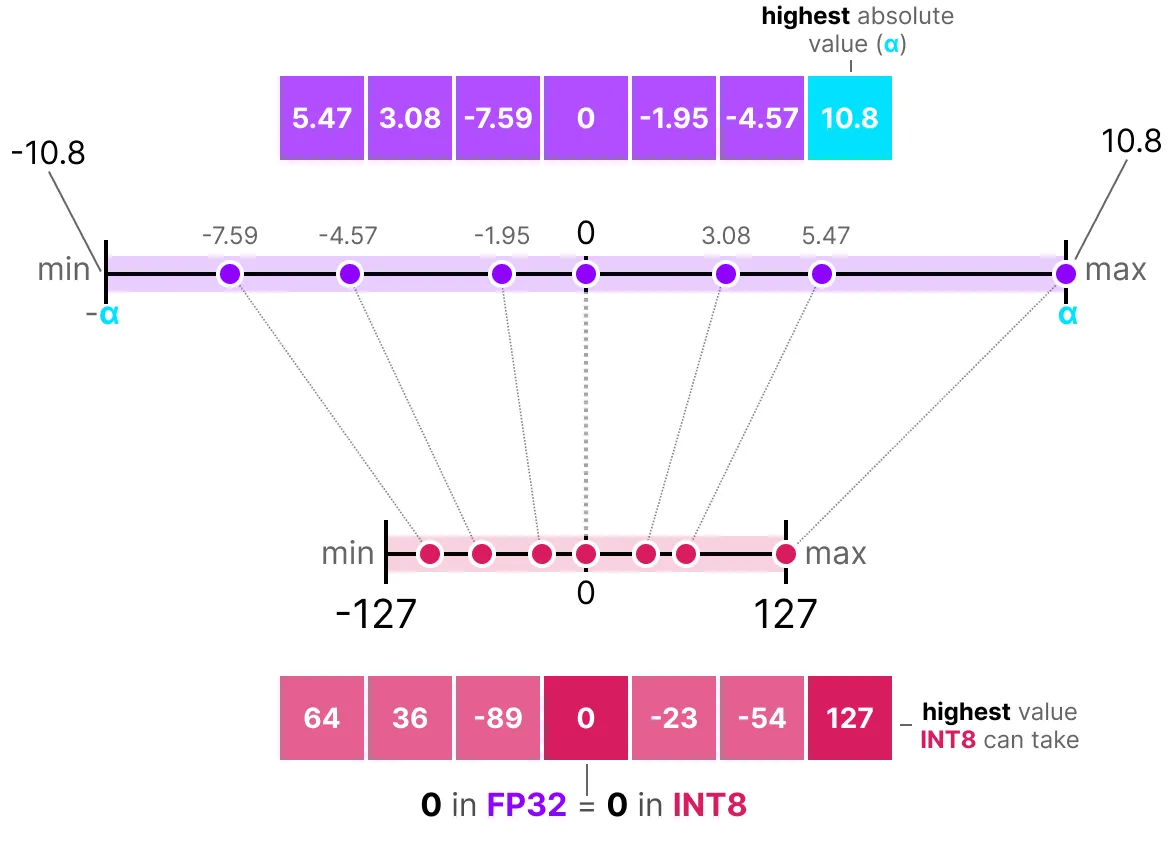

Absolute maximum (absmax) quantization

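- Given a list of values, we take the highest absolute value, $\alpha = \max_i |x_i|$, as the range to perform the linear mapping

- e.g. FP32 → INT8: $[-\alpha, \alpha] \to [-127, 127]$

- $2^{b-1} - 1$ = max representable value of your format (127 for INT8, where $b$ is the number of bits)

- scale factor $s = \frac{2^{b-1} - 1}{\alpha}$

- quantized value $x_q = \mathrm{round}(s \cdot x)$

- quantization error = $x - x_q / s$ (in original precision)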
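The absmax steps above can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation: the function names (`absmax_quantize`, `absmax_dequantize`) and the sample values are assumptions made for the example, and it assumes a signed format with the symmetric range $[-(2^{b-1}-1),\ 2^{b-1}-1]$.

```python
def absmax_quantize(values, bits=8):
    """Symmetric (absmax) quantization of a list of floats to signed integers."""
    q_max = 2 ** (bits - 1) - 1              # max representable value, 127 for INT8
    alpha = max(abs(v) for v in values)      # highest absolute value in the input
    scale = q_max / alpha if alpha != 0 else 1.0  # scale factor s (guard all-zero input)
    # Note: Python's round() rounds exact ties to the nearest even integer.
    quantized = [round(scale * v) for v in values]
    return quantized, scale


def absmax_dequantize(quantized, scale):
    """Map the integers back to the original precision."""
    return [q / scale for q in quantized]


values = [-3.1, 0.0, 0.5, 1.23, 6.35]
quantized, scale = absmax_quantize(values)
restored = absmax_dequantize(quantized, scale)

# Quantization error, measured in the original precision.
errors = [v - r for v, r in zip(values, restored)]

print(quantized)
print(errors)
```

Zero stays at zero, the largest magnitude lands on 127, and each error is at most half a quantization step ($0.5 / s$).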

Asymmetric quantization

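- It maps the minimum ($\beta$) and maximum ($\alpha$) values from the float range to the minimum and maximum values of the quantized range.

- Example with zeropoint quantization

- $q_{\max} = 2^{b-1} - 1$ = max representable value of your format (127 for INT8, where $b$ is the number of bits)

- $q_{\min} = -2^{b-1}$ = min representable value of your format (-128 for INT8)

- scale factor $s = \frac{q_{\max} - q_{\min}}{\alpha - \beta}$

- zeropoint $z = \mathrm{round}(-s \cdot \beta) + q_{\min}$

- $x_q = \mathrm{round}(s \cdot x + z)$

- e.g. $\beta$ gets mapped to $q_{\min}$

- e.g. $\alpha$ gets mapped to $q_{\max}$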
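A zeropoint scheme of this kind could look roughly like the sketch below. It assumes a signed integer format with range $[-2^{b-1},\ 2^{b-1}-1]$ and the common formulation "scale, add zeropoint, round"; the function names and sample values are hypothetical and chosen only for illustration.

```python
def zeropoint_quantize(values, bits=8):
    """Asymmetric (zeropoint) quantization of a list of floats."""
    q_min = -(2 ** (bits - 1))               # min representable value, -128 for INT8
    q_max = 2 ** (bits - 1) - 1              # max representable value, 127 for INT8
    beta, alpha = min(values), max(values)   # float range [beta, alpha]
    # Scale factor: width of the integer range over width of the float range.
    scale = (q_max - q_min) / (alpha - beta) if alpha != beta else 1.0
    # Zeropoint: the integer that the float value 0.0 is mapped to.
    zero_point = round(-scale * beta) + q_min
    # Clamp to guard against rounding pushing a value just outside the range.
    quantized = [min(q_max, max(q_min, round(scale * v + zero_point)))
                 for v in values]
    return quantized, scale, zero_point


def zeropoint_dequantize(quantized, scale, zero_point):
    """Undo the shift and the scaling to return to the original precision."""
    return [(q - zero_point) / scale for q in quantized]


values = [-1.0, 0.0, 2.5, 4.1]
quantized, scale, zero_point = zeropoint_quantize(values)
restored = zeropoint_dequantize(quantized, scale, zero_point)

print(quantized, zero_point)
print(restored)
```

Here the minimum of the input lands on the lowest integer and the maximum on the highest, while 0.0 lands on the zeropoint instead of on 0.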

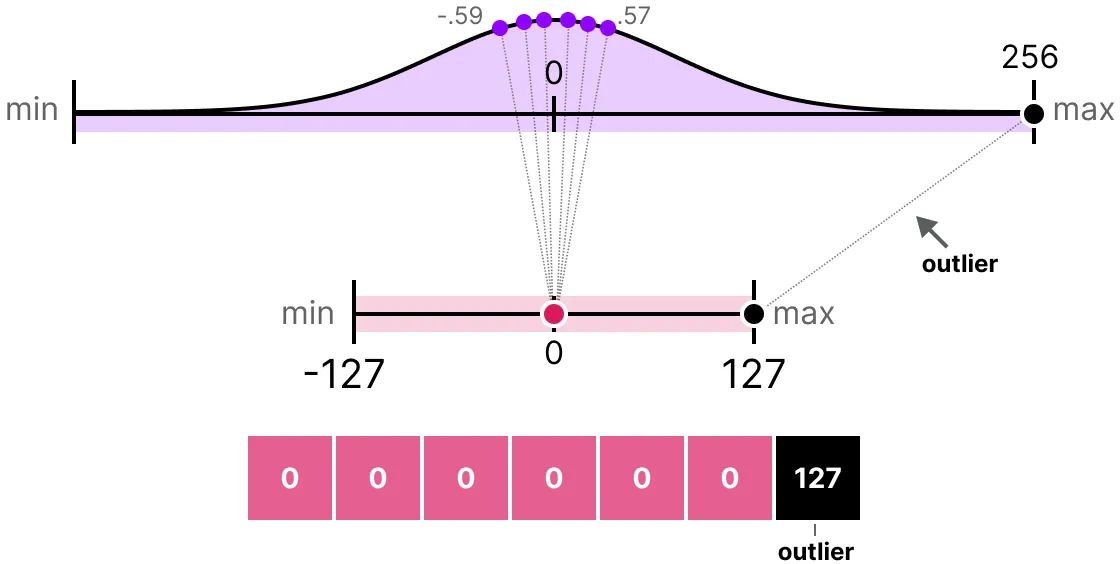

Outliers

Clipping

- If your vector has an outlier, then using “naive” quantization can lead to most values being mapped to the same quantized value

- We need to clip the outlier before quantizing, e.g. by clamping the FP32 values to a chosen range

- How do you choose the clipping range?

- For weights and biases

- Manually choose a percentile of the input

- Minimize the mean squared error (MSE) between the original and quantized weights

- Minimize the KL divergence (relative entropy) between the distributions of the original and quantized values
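To make the effect of clipping concrete, here is a small sketch of the percentile option only. It is an illustration under stated assumptions: the helper names (`quantize_with_clip`, `percentile_clip`), the 99% fraction, the nearest-rank percentile rule, and the synthetic data with a single large outlier are all made up for the example.

```python
def quantize_with_clip(values, clip, bits=8):
    """Symmetric quantization after clamping every value to [-clip, clip]."""
    q_max = 2 ** (bits - 1) - 1
    scale = q_max / clip
    clipped = [max(-clip, min(clip, v)) for v in values]
    return [round(scale * v) for v in clipped], scale


def percentile_clip(values, fraction=0.99):
    """Pick a clipping threshold from the sorted magnitudes (nearest-rank rule)."""
    magnitudes = sorted(abs(v) for v in values)
    index = min(len(magnitudes) - 1, int(fraction * len(magnitudes)))
    return magnitudes[index]


# 101 small values in [-0.5, 0.5] plus a single large outlier.
values = [0.01 * i for i in range(-50, 51)] + [256.0]

# "Naive" absmax: the outlier sets the range.
naive_q, _ = quantize_with_clip(values, clip=max(abs(v) for v in values))

# Percentile clipping: the outlier is clamped to the threshold.
clip = percentile_clip(values, fraction=0.99)
clipped_q, _ = quantize_with_clip(values, clip=clip)

print(len(set(naive_q[:-1])))    # distinct codes used by the small values, naive
print(len(set(clipped_q[:-1])))  # distinct codes used by the small values, clipped
```

Without clipping, every small value collapses onto one integer. With clipping, they spread across the available range, at the cost of a large error on the outlier itself, which is saturated at the maximum code.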

- For activations

- Unlike weights, activations vary with each input fed into the model during inference, which makes them challenging to quantize accurately.

-