- Paper: Wei et al. (2022), “Chain-of-Thought Prompting Elicits Reasoning in Large Language Models”

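- Standard few-shot prompting: exemplars are `<input, output>` pairs

- Chain-of-thought (CoT) prompting: exemplars are `<input, thought, output>` triples, i.e. each answer is preceded by intermediate reasoning steps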

- CoT is extremely data-efficient: it needs only a few annotated exemplars per task and no fine-tuning
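A minimal sketch of the two prompt formats, assuming a plain-text "Q:/A:" layout. The exemplar, the helper names `standard_prompt` and `cot_prompt`, and the "The answer is …" phrasing are illustrative choices for this sketch, not taken from the paper.

```python
from typing import List, Tuple

# Hypothetical exemplars written for this sketch (not from the paper).
STANDARD_EXEMPLARS: List[Tuple[str, str]] = [
    ("Tom has 3 apples and buys 2 more. How many apples does he have?", "5"),
]

COT_EXEMPLARS: List[Tuple[str, str, str]] = [
    (
        "Tom has 3 apples and buys 2 more. How many apples does he have?",
        "Tom starts with 3 apples. Buying 2 more gives 3 + 2 = 5.",
        "5",
    ),
]


def standard_prompt(question: str) -> str:
    """Few-shot prompt from <input, output> pairs: the model sees only answers."""
    parts = [f"Q: {q}\nA: {a}" for q, a in STANDARD_EXEMPLARS]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


def cot_prompt(question: str) -> str:
    """Few-shot prompt from <input, thought, output> triples: reasoning precedes the answer."""
    parts = [
        f"Q: {q}\nA: {thought} The answer is {a}."
        for q, thought, a in COT_EXEMPLARS
    ]
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


print(cot_prompt("Ann has 4 pens and loses 1. How many pens does she have?"))
```

The only difference between the two formats is the rationale inserted before each exemplar answer; the new question is left open after "A:" so the model continues in the same style.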

Self-consistency (SC)

- Wang et al., “Self-Consistency Improves Chain of Thought Reasoning in Language Models” (ICLR 2023)

- The self-consistency method contains three steps:

- (1) prompt a language model using chain-of-thought (CoT) prompting;

- (2) replace the greedy decoding used in standard CoT prompting with sampling from the language model's decoder, generating a diverse set of reasoning paths;

- (3) marginalize out the reasoning paths and aggregate the final answers by choosing the most consistent one (i.e., a majority vote over the final answer set).
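The three steps above can be sketched as follows. This assumes a hypothetical `sample(prompt)` callable that returns one temperature-sampled completion, and that each completion ends with "The answer is N." for a numeric N; `extract_answer` is an illustrative helper, and aggregation is a plain majority vote.

```python
import itertools
import re
from collections import Counter
from typing import Callable, List, Optional

# Assumption: completions end with "The answer is N." where N is numeric.
ANSWER_PATTERN = r"The answer is\s*(-?[\d.,]+)"


def extract_answer(completion: str) -> Optional[str]:
    """Return the last 'The answer is N' value, normalized, or None."""
    matches = re.findall(ANSWER_PATTERN, completion)
    if not matches:
        return None
    return matches[-1].rstrip(".").replace(",", "")


def self_consistency(
    prompt: str,
    sample: Callable[[str], str],  # hypothetical: draws ONE sampled completion
    num_paths: int = 10,
) -> Optional[str]:
    """Steps (1)-(3): CoT prompt, sample diverse paths, majority-vote the answers."""
    answers: List[str] = []
    for _ in range(num_paths):
        # Step 2: sampling (not greedy decoding) yields a different path each call.
        answer = extract_answer(sample(prompt))  # the reasoning path is discarded
        if answer is not None:
            answers.append(answer)
    if not answers:
        return None
    # Step 3: marginalize over reasoning paths by counting final answers.
    return Counter(answers).most_common(1)[0][0]


# Usage with canned outputs standing in for a real sampler.
canned = itertools.cycle([
    "16 - 3 - 4 = 9 eggs left, 9 * 2 = 18. The answer is 18.",
    "She sells 16 - 3 = 13 eggs, 13 * 2 = 26. The answer is 26.",
    "After breakfast and baking, 9 eggs remain; 9 * 2 = 18. The answer is 18.",
])
print(self_consistency("Q: ...", lambda p: next(canned), num_paths=3))
```

Exact-match voting like this only works when answers come from a small, comparable set (numbers, options); that limitation is what USC addresses.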

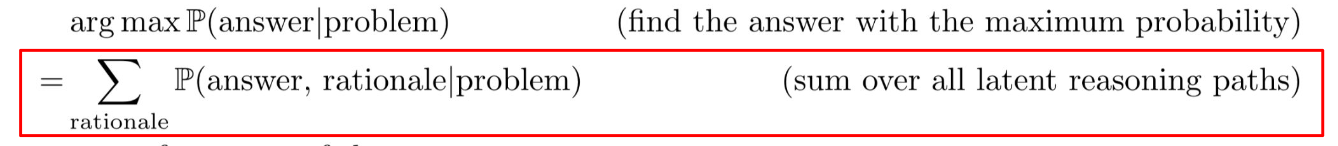

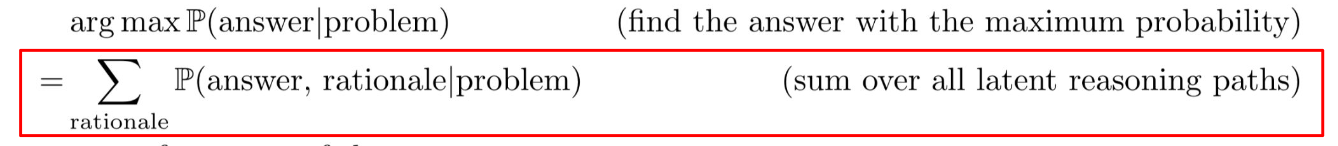

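- We need marginalization because each generated text is “rationale + answer”: the single most likely sequence (which greedy decoding approximates) need not contain the most likely answer. What we want is the answer maximizing P(answer | question) = Σ_rationale P(rationale, answer | question), so greedy decoding optimizes the wrong objective.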

- When there is no reasoning path (the model outputs the answer directly), self-consistency is unnecessary, since we can directly choose the most likely answer as argmax_Y P(Y|X).
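A toy numeric illustration of why the most likely sequence and the most likely answer can disagree. The probabilities below are made up for illustration, not taken from any model.

```python
from collections import defaultdict
from typing import Dict, Tuple

# Made-up probabilities P(rationale, answer | question), for illustration only.
SEQUENCE_PROBS: Dict[Tuple[str, str], float] = {
    ("rationale 1", "26"): 0.30,
    ("rationale 2", "18"): 0.25,
    ("rationale 3", "18"): 0.20,
    ("rationale 4", "14"): 0.20,
    ("rationale 5", "26"): 0.05,
}


def answer_of_most_likely_sequence(probs: Dict[Tuple[str, str], float]) -> str:
    """Answer inside the single most probable (rationale, answer) sequence."""
    (_, answer), _ = max(probs.items(), key=lambda item: item[1])
    return answer


def marginal_answer_probs(probs: Dict[Tuple[str, str], float]) -> Dict[str, float]:
    """P(answer | question): sum sequence probabilities over rationales."""
    marginal: Dict[str, float] = defaultdict(float)
    for (_, answer), p in probs.items():
        marginal[answer] += p
    return dict(marginal)


def most_likely_answer(probs: Dict[Tuple[str, str], float]) -> str:
    """Argmax of the marginal answer distribution."""
    marginal = marginal_answer_probs(probs)
    return max(marginal, key=marginal.__getitem__)


print(answer_of_most_likely_sequence(SEQUENCE_PROBS), most_likely_answer(SEQUENCE_PROBS))
# → 26 18
```

The top sequence carries answer 26 (0.30), but answer 18 has the larger marginal (0.25 + 0.20 = 0.45 vs. 0.35). Self-consistency roughly estimates these marginals by sampling paths and counting answers, since each sample is one draw of (rationale, answer).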

Universal Self-Consistency (USC)

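- Instead of exact-match voting, concatenate the sampled responses and ask the LLM itself to select the most consistent one based on majority consensus

- USC consistently improves performance on free-form generation tasks, such as summarization, where standard SC is inapplicable because free-form outputs cannot be aggregated by exact-match voting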
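A sketch of USC, assuming a hypothetical `llm(prompt) -> str` callable. The selection prompt is modeled loosely on the kind of instruction USC uses (pick the most consistent response and name it as "Response X"); its exact wording and the regex-based index parsing are assumptions of this sketch.

```python
import re
from typing import Callable, List, Optional


def build_usc_prompt(question: str, responses: List[str]) -> str:
    """Concatenate all sampled responses into one selection prompt.

    Assumption: the instruction wording is illustrative, not the official prompt.
    """
    parts = [f"Question: {question}", "I have generated the following responses:"]
    for i, response in enumerate(responses):
        parts.append(f"Response {i}: {response}")
    parts.append(
        "Evaluate these responses. Select the most consistent response based on "
        'majority consensus. Start your answer with "The most consistent '
        'response is Response X".'
    )
    return "\n\n".join(parts)


def universal_self_consistency(
    question: str,
    responses: List[str],
    llm: Callable[[str], str],  # hypothetical: prompt -> model reply
) -> Optional[str]:
    """Let the LLM pick the most consistent response; return that response verbatim."""
    reply = llm(build_usc_prompt(question, responses))
    match = re.search(r"Response\s+(\d+)", reply)
    if match is None:
        return None  # the reply did not name a response
    index = int(match.group(1))
    if not 0 <= index < len(responses):
        return None  # the named index does not exist
    return responses[index]


# Usage with a stubbed model reply.
summaries = ["The cat slept all day.", "The cat napped through the day.", "The dog barked."]
print(universal_self_consistency(
    "Summarize the story.", summaries,
    lambda p: "The most consistent response is Response 1",
))
```

Because the model judges consistency itself, the responses never need a parseable final answer, which is why this works for free-form outputs like summaries.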

Least-to-most prompting

- Decompose a complex problem into a list of easier subproblems

- Sequentially solve these subproblems (from least to most complex), feeding the answers to earlier subproblems into the prompt for later ones

- Least-to-Most Prompting = Planning/Sketch + Reasoning
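A two-stage sketch of least-to-most prompting, assuming a hypothetical `llm(prompt) -> str` callable. The original method uses few-shot exemplars in both stages; they are omitted here for brevity, and the instruction wording plus asking the original problem as the final question are design choices of this sketch.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # hypothetical: prompt -> model completion


def decompose(problem: str, llm: LLM) -> List[str]:
    """Stage 1 (planning/sketch): ask for simpler subproblems, one per line."""
    prompt = (
        "Break the following problem into simpler subproblems, one per line, "
        "ordered from easiest to hardest.\n\n"
        f"Problem: {problem}\nSubproblems:"
    )
    lines = llm(prompt).splitlines()
    # Drop blank lines and strip leading bullet markers and spaces.
    return [line.strip().lstrip("- ").strip() for line in lines if line.strip()]


def least_to_most(problem: str, llm: LLM) -> str:
    """Stage 2 (reasoning): solve subproblems in order, reusing earlier answers."""
    context = f"Problem: {problem}\n"
    answer = ""
    # The original problem is asked last, after all easier subproblems.
    for question in decompose(problem, llm) + [problem]:
        context += f"Q: {question}\nA:"
        answer = llm(context).strip()
        context += f" {answer}\n"  # earlier answers stay visible to later steps
    return answer
```

Keeping one growing context, rather than solving each subproblem in isolation, is what lets later (harder) steps build on the answers to earlier (easier) ones.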