- First paper: “Finetuned language models are zero-shot learners.” https://arxiv.org/abs/2109.01652

- Second paper: “Scaling instruction-finetuned language models” https://arxiv.org/abs/2210.11416 (more scaling, more tasks, CoT instruction datasets)

- “Instruction tuning” finetunes a language model on a collection of NLP tasks described using instructions

- Instruction tuning helps the model perform tasks it wasn’t trained on, giving the model a range of applications.

- It improves the zero-shot performance of language models on unseen tasks.

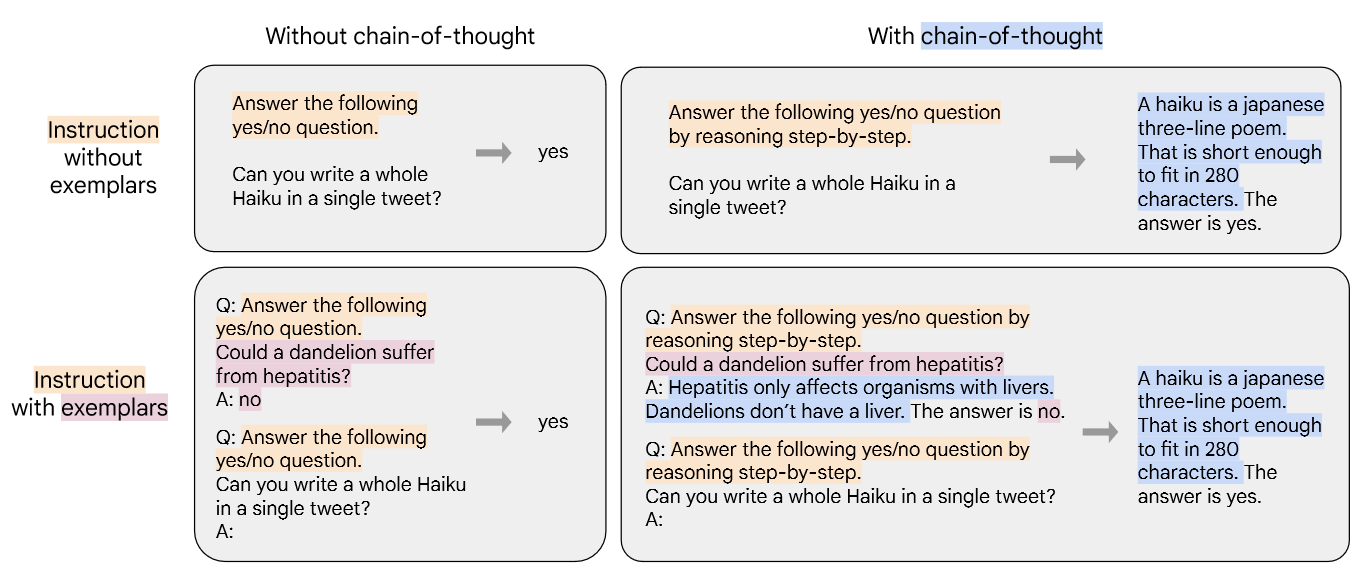

- (On whether to include CoT data) Instruction finetuning improves performance on unseen tasks when those tasks use the same prompting paradigm (CoT or non-CoT) as the finetuning tasks.

Data Composition

FLAN-1

-

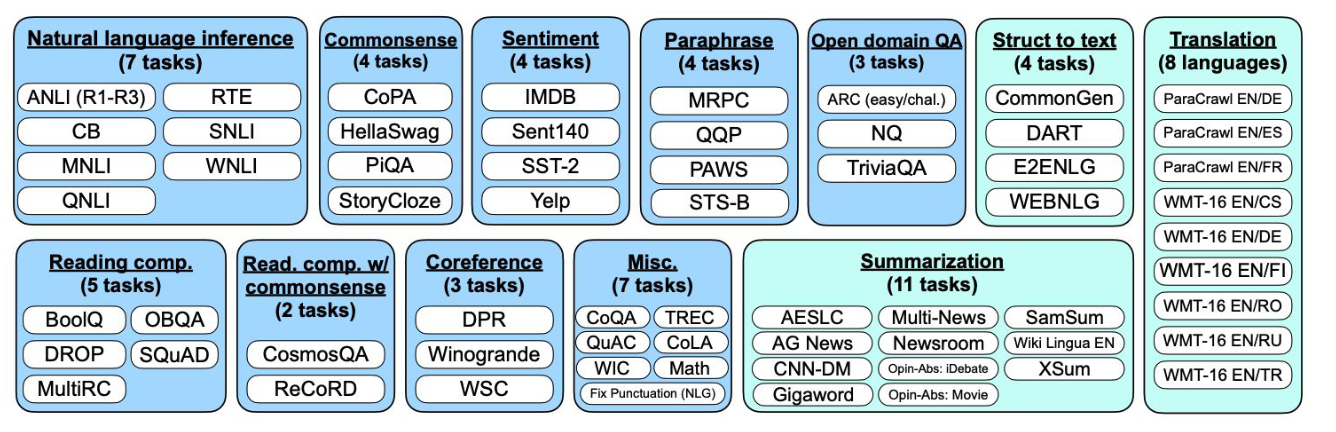

62 NLP Datasets

-

12 “task clusters”

-

Natural Language Inference, Reading Comprehension, and Open-Domain are not part of training!! Used to test zero-shot abilities.

-

They used templates to create the format of the data ⇒ curiously, more templates per dataset did not help much.

FLAN-2

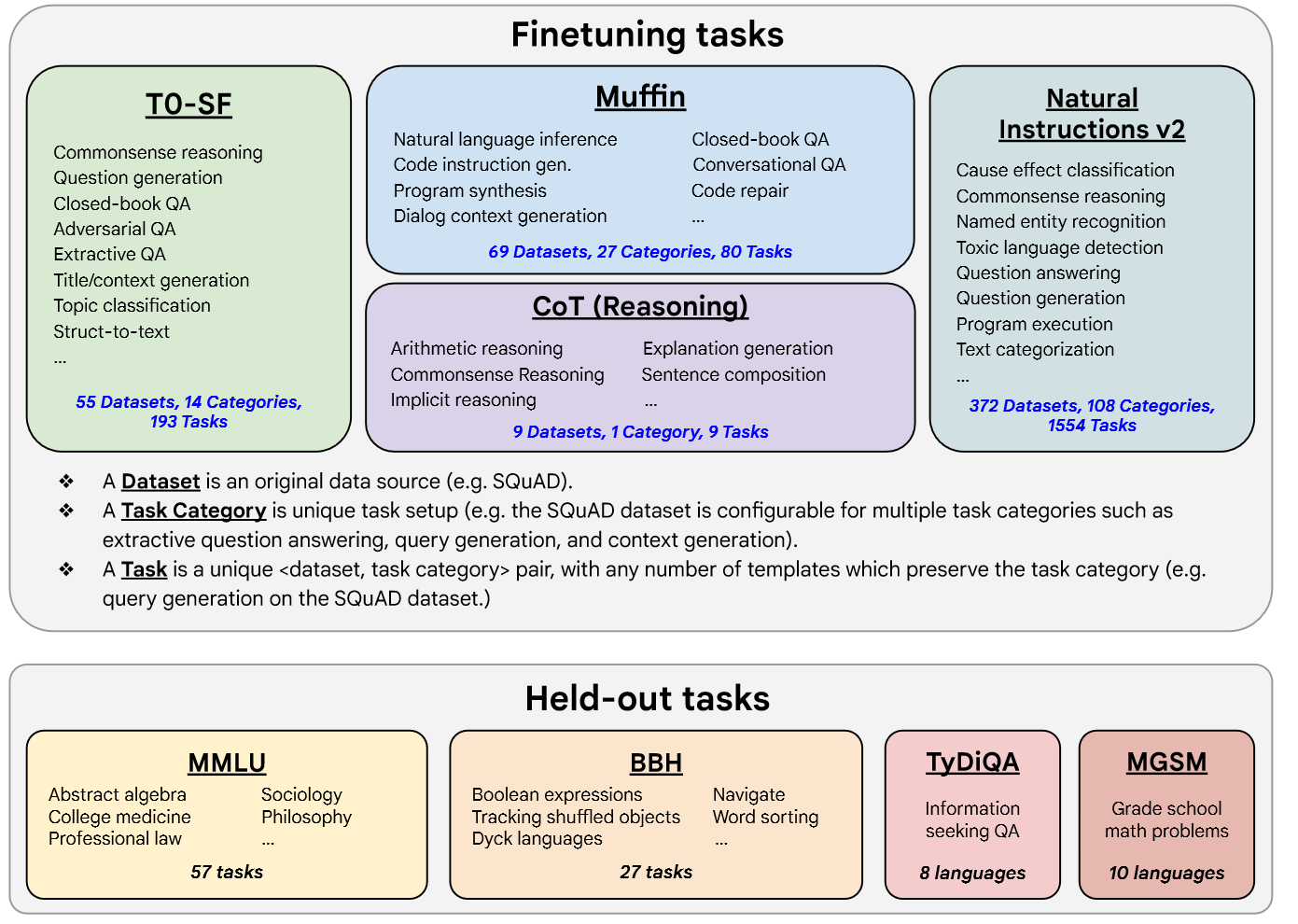

- 473 datasets, 146 task categories, 1836 total tasks.

- Muffin = Multi-task finetuning with instructions.

- T0-SF = tasks from T0 that do not overlap with Muffin (SF stands for “sans Flan”)

- Niv2 = Natural-Instructions_v2 from “Benchmarking Generalization via In-Context Instructions on 1,600+ Language Tasks”

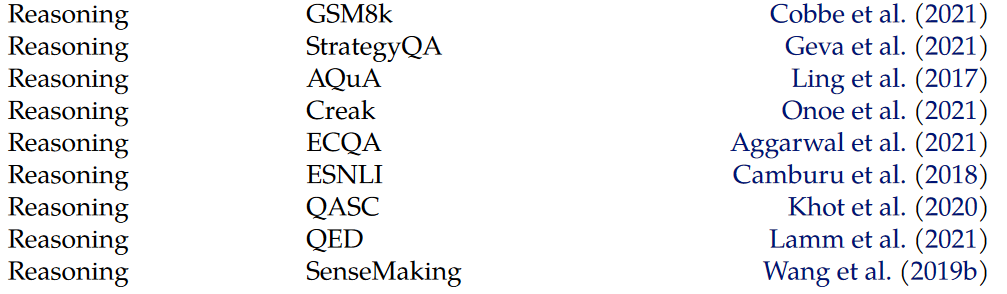

- CoT = datasets from prior work for which human raters manually wrote CoT annotations for a training corpus.

Data preparation

- Using natural instructions (e.g., “Please translate this sentence to French: ‘The dog runs.’”) instead of the raw data (e.g., “[Translation: WMT’14 to French] The dog runs.”) is necessary. Formatting matters a lot.

- FLAN-1

- Adding few-shot exemplars to FLAN is a complementary method for improving the performance of instruction-tuned models. Standard deviation among templates is lower for few-shot FLAN, indicating reduced sensitivity to prompt engineering

- FLAN-2 (adds CoT)
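To make the formatting step concrete, here is a minimal sketch of turning a raw example into a natural-language instruction and optionally prepending few-shot exemplars. The template wording and the helper names (`TEMPLATES`, `format_example`, `add_few_shot`) are made up for illustration; they are not the templates or code from the papers.

```python
import random

# Hypothetical templates for a translation task; the wording is illustrative
# and not copied from the FLAN template set.
TEMPLATES = [
    "Please translate this sentence to {target}: '{text}'",
    "How would you say '{text}' in {target}?",
    "Translate to {target}:\n\n{text}",
]


def format_example(text, target, rng):
    """Pick one template at random and fill it with the raw example."""
    template = rng.choice(TEMPLATES)
    return template.format(text=text, target=target)


def add_few_shot(exemplars, prompt):
    """Prepend solved (input, output) exemplars to a prompt."""
    shots = [f"{x}\n{y}" for x, y in exemplars]
    return "\n\n".join(shots + [prompt])


rng = random.Random(0)
prompt = format_example("The dog runs.", "French", rng)
few_shot_prompt = add_few_shot(
    [("Translate to French:\n\nThe cat sleeps.", "Le chat dort.")], prompt
)
print(few_shot_prompt)
```

Sampling a template per example is one simple way to get varied phrasing across the training set; the notes above only say that templates were used and that adding more per dataset did not help much.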

How to handle classification tasks

- FLAN-1 includes an options suffix: the token OPTIONS is appended to the end of a classification task, along with a list of the output classes for that task. This makes the model aware of which choices are desired when responding to classification tasks.
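A minimal sketch of the options suffix, assuming a simple one-class-per-line layout. The function name `with_options` and the exact layout of the list are assumptions; the notes only say that the token OPTIONS and the class list are appended.

```python
def with_options(prompt, classes):
    """Append an OPTIONS suffix listing the allowed output classes.

    The one-class-per-line layout is an assumption made for this sketch.
    """
    lines = [prompt, "OPTIONS:"] + [f"- {c}" for c in classes]
    return "\n".join(lines)


nli_prompt = with_options(
    "Premise: The dog runs.\nHypothesis: An animal is moving.\n"
    "Does the premise entail the hypothesis?",
    ["yes", "it is not possible to tell", "no"],
)
print(nli_prompt)
```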

Data mixing

- FLAN-1

- Our instruction tuning pipeline mixes all datasets and randomly samples from each dataset. To balance the different sizes of datasets, we limit the number of training examples per dataset to 30k and follow the examples-proportional mixing scheme (Raffel et al., 2020) with a mixing rate maximum of 3k.

- FLAN-2

Examples-proportional mixing

- Prevents overfitting to a single task that has much more data than the others

- Specifically, if the number of examples in each of our $N$ tasks’ data sets is $e_n$, $n \in \{1, \ldots, N\}$, then we set the probability of sampling an example from the $m$-th task during training to $r_m = \min(e_m, K) / \sum_{n=1}^{N} \min(e_n, K)$, where $K$ is the artificial data set size limit. This sets a maximum size of $K$ for each task.

- we find that for most tasks there is a “sweet spot” for K where the model obtains the best performance, and larger or smaller values of K tend to result in worse performance
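Examples-proportional mixing (Raffel et al., 2020) samples task m with probability min(e_m, K) / Σ_n min(e_n, K), where e_n is the size of task n and K is the size limit. The sketch below computes these rates and draws task names from them. The dataset names and sizes are made up, and `mixing_rates` is a hypothetical helper, not code from either paper.

```python
import random


def mixing_rates(sizes, K):
    """Return the sampling probability of each task under examples-proportional mixing.

    sizes: dict mapping task name -> number of examples
    K: artificial data set size limit (each task counts as at most K examples)
    """
    clipped = {name: min(n, K) for name, n in sizes.items()}
    total = sum(clipped.values())
    return {name: c / total for name, c in clipped.items()}


# Made-up dataset sizes for illustration only.
sizes = {"big": 1_000_000, "medium": 3_000, "small": 500}
rates = mixing_rates(sizes, K=3_000)

# Draw the task for each training example according to the rates.
rng = random.Random(0)
names = list(rates)
batch_tasks = rng.choices(names, weights=[rates[n] for n in names], k=8)
print(rates)
print(batch_tasks)
```

With K = 3,000 the million-example task is sampled no more often than the 3,000-example one, which is the point of the cap: without it, the large task would dominate almost every batch.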

Scaling laws

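- FLAN-1: For unseen tasks, instruction tuning improves performance only at sufficient model scale. In the paper the gains show up for the 68B and 137B models, while for models of 8B and smaller instruction tuning actually hurts held-out performance ⇒ need to be careful about performance with smaller models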

Prompt tuning

- Check prompt tuning in the paper: learnable soft-prompt tokens are prepended to the input and optimized for a given downstream task while the model itself stays frozen.